TCP Offload Engine

TCP Offload Engine – Offloading TCP into hardware

Many industrial applications benefit from Ethernet and TCP/IP because they are well-known, widely supported networking standards. Increasingly, these applications require higher bandwidth and lower latency.

As a result, a software TCP/IP stack running at maximum bandwidth can easily overload the CPU, which then spends more time handling data than running your application.

easics’ TCP Offload Engine (TOE) offloads the TCP/IP stack from the CPU and handles it in FPGA or ASIC hardware. The core is a configurable, all-hardware IP block that acts as a TCP server for sending and receiving TCP/IP data. Because everything is handled in hardware, very high throughput and low latency are possible. The IP block is completely self-sufficient and can be used as a black-box module that takes care of all networking tasks, leaving the rest of the system free to spend its processing power purely on application logic. In some use cases, integrating our full-hardware TCP/IP stack eliminates the need for an embedded processor altogether.

The easics TCP Offload Engine is available as a 1 Gbit/s or 10 Gbit/s version. Both versions support Ethernet packets, IP packets, ICMP packets for ping, TCP packets and ARP packets. The 10 Gbit/s version additionally supports pause frames.
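Because the core acts as a TCP server, a host on the same network can reach it with any standard TCP client. The sketch below is a minimal, hypothetical host-side test client written with Python's standard `socket` module; it is not part of the easics deliverables. The helper name `exchange`, the address and the port are placeholders, and what the core sends back depends on the application logic behind its TX FIFO.

```python
import socket


def exchange(host: str, port: int, payload: bytes, reply_len: int,
             timeout_s: float = 2.0) -> bytes:
    """Send payload to a TCP server and read back up to reply_len bytes.

    host and port are assumptions: use the values configured in the core.
    """
    with socket.create_connection((host, port), timeout=timeout_s) as sock:
        sock.sendall(payload)
        chunks = []
        remaining = reply_len
        while remaining > 0:
            chunk = sock.recv(min(remaining, 65536))
            if not chunk:  # peer closed the connection before reply_len bytes
                break
            chunks.append(chunk)
            remaining -= len(chunk)
        return b"".join(chunks)
```

For example, `exchange("192.168.1.10", 5000, b"ping!", reply_len=5)` would send five bytes and wait for five bytes in return, assuming the core has been configured with that address and port and the application echoes data back.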

IP Core Architecture

The figure below shows the core’s building blocks and its four most important interfaces.

The first is an industry-standard GMII or XGMII interface, which communicates with a 1 Gbit/s or 10 Gbit/s PHY respectively. The second sits on the application side: two FIFOs with a simple push/pop interface, one for RX and one for TX.

These FIFO interfaces, as well as an internal TCP block, communicate with a memory system that must be provided outside the core (the third interface). The user selects the size and type of memory. ARM’s AMBA AXI4 2.0E is the protocol used for this communication. FPGA vendors such as Xilinx and Intel provide building blocks to interface internal block RAM, SRAM, or DRAM with an AXI bus.

The fourth and final interface is used to configure various networking parameters and to read status information.
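When choosing the size of the external memory, a common rule of thumb for TCP is that a sender should be able to hold at least one bandwidth-delay product of unacknowledged data per connection, in case it has to retransmit. The source does not state how the core partitions its memory, so the Python sketch below only illustrates that general rule; the function names and the round-trip times are assumptions chosen for illustration.

```python
def bdp_bytes(line_rate_bps: int, rtt_us: int) -> int:
    """Bandwidth-delay product in bytes, rounded up.

    line_rate_bps: link rate in bit/s; rtt_us: round-trip time in microseconds.
    """
    bit_microseconds = line_rate_bps * rtt_us
    # Divide by 1e6 (us -> s) and by 8 (bits -> bytes), rounding up.
    return -(-bit_microseconds // 8_000_000)


def tx_buffer_bytes(line_rate_bps: int, rtt_us: int, sockets: int = 1) -> int:
    """Rule-of-thumb retransmit buffer: one BDP per socket (an assumption)."""
    return sockets * bdp_bytes(line_rate_bps, rtt_us)
```

With an assumed round-trip time of 100 µs, `bdp_bytes(10_000_000_000, 100)` gives 125,000 bytes for a single 10 Gbit/s connection, and `tx_buffer_bytes(10_000_000_000, 100, sockets=4)` gives 500,000 bytes for four. Longer round-trip times scale the requirement proportionally.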

Performance

The following data throughput numbers were measured on a Xilinx ZC706:

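1 Gbit/s version:

| MTU (bytes) | TX (Mbit/s) | CPU load, TX (%) | RX (Mbit/s) | CPU load, RX (%) |
|---|---|---|---|---|
| 1500 | 905 | 0 | 949 | 0 |

10 Gbit/s version:

| MTU (bytes) | TX (Gbit/s) | CPU load, TX (%) | RX (Gbit/s) | CPU load, RX (%) |
|---|---|---|---|---|
| 1500 | 9.18 | 0 | 9.35 | 0 |
| 9000 | 9.69 | 0 | 9.73 | 0 |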

Throughput is higher with an MTU of 9000 (jumbo frames) because a smaller share of each frame is spent on headers and inter-frame overhead. CPU load is 0% because the entire TCP/IP stack runs in the FPGA (full hardware acceleration).
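To put these figures in context, the upper bound on TCP payload throughput over Ethernet can be worked out from the per-frame overhead. The sketch below assumes IPv4 and TCP headers without options (20 bytes each), no VLAN tag, and the standard Ethernet overhead of preamble, header, frame check sequence and inter-frame gap. The function name is hypothetical, and the source does not say whether the measured values are payload throughput, so treat the comparison as indicative only.

```python
# Per-frame Ethernet overhead on the wire, in bytes:
# preamble + SFD (8) + MAC header (14) + FCS (4) + inter-frame gap (12).
ETHERNET_OVERHEAD = 8 + 14 + 4 + 12

# IPv4 header (20) + TCP header (20), both without options (an assumption).
IP_TCP_HEADERS = 20 + 20


def max_tcp_goodput(mtu: int, line_rate: float) -> float:
    """Upper bound on TCP payload throughput, in the unit of line_rate."""
    payload = mtu - IP_TCP_HEADERS        # TCP payload bytes per frame
    on_wire = mtu + ETHERNET_OVERHEAD     # bytes of link time per frame
    return line_rate * payload / on_wire
```

Under these assumptions the bound is about 949 Mbit/s for an MTU of 1500 at 1 Gbit/s, about 9.49 Gbit/s for an MTU of 1500 at 10 Gbit/s, and about 9.91 Gbit/s for an MTU of 9000 at 10 Gbit/s. The measured figures in the tables above sit at or just below these bounds.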

The following latency numbers were measured for the 10G TOE in simulation, using the Xilinx transceiver models (which contribute 160 ns of latency):

Resource Usage & Scalability

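The design scales so that each additional TCP socket costs only a small number of additional LUTs or ALMs; the main extra resource to provide is memory. In the 10 GigE evaluation builds listed below, going from one to four sockets raises the count from 34K to 39K LUTs on the Zynq-7045 and from 24K to 27K ALMs on the Arria 10.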
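The incremental logic cost per socket can be estimated from the one-socket and four-socket resource counts published for the evaluation systems on this page. The sketch below assumes the cost grows linearly with the number of sockets, which the source does not confirm, and the published counts are rounded to the nearest thousand, so the result is a rough estimate. The function names are hypothetical.

```python
def cost_per_extra_socket(base_count: float, base_sockets: int,
                          multi_count: float, multi_sockets: int) -> float:
    """Average logic cells added per extra socket between two builds."""
    return (multi_count - base_count) / (multi_sockets - base_sockets)


def estimate_count(base_count: float, per_socket: float, sockets: int) -> float:
    """Linear extrapolation from a one-socket build (an assumption)."""
    return base_count + per_socket * (sockets - 1)


# Published 10 GigE figures: Zynq-7045 in LUTs, Arria 10 in ALMs.
zynq_per_socket = cost_per_extra_socket(34_000, 1, 39_000, 4)
arria_per_socket = cost_per_extra_socket(24_000, 1, 27_000, 4)
```

This gives roughly 1.7K LUTs per extra socket on the Zynq-7045 and 1K ALMs per extra socket on the Arria 10, or about 4 to 5% of the single-socket stack in each case.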

Application Areas

Some of the main application areas are listed below. Don’t hesitate to contact us for advice on the feasibility of your use case.

Request a demo or evaluation kit!

Xilinx Zynq-7045 Evaluation System

- Targeted to Xilinx Zynq-7045 on ZC706

- Dual ARM Cortex-A9 core processors are not used for handling TCP/IP. They are free to be used for your application.

- SFP+ for 10GigE

- Supports Vivado design flow

- 10 GigE resource count (64-bit @ 156.25 MHz):

  - Vivado 2017.1 targeting XC7Z045

  - Full Stack (1 TCP socket): 34K LUTs

  - Multisocket Full Stack (4 TCP sockets): 39K LUTs

- Runs on Xilinx ZC706 Development Kit

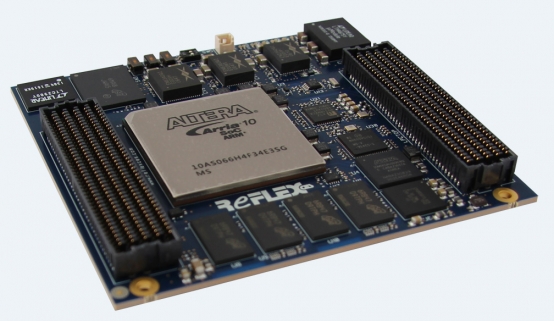
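The "64-bit @ 156.25 MHz" figure in the resource count presumably describes the width and clock of the core's datapath; the source does not say so explicitly. Under that assumption, the raw datapath rate is simply width times clock frequency, which the short sketch below checks against the two line rates. The function name is hypothetical.

```python
def datapath_rate_bps(width_bits: int, clock_hz: float) -> float:
    """Raw datapath throughput in bit/s: one word of width_bits per clock."""
    return width_bits * clock_hz


rate_10g = datapath_rate_bps(64, 156.25e6)  # 10 GigE configuration
rate_1g = datapath_rate_bps(32, 31.25e6)    # 1 GigE configuration
```

Both configurations come out at exactly the line rate, 10 Gbit/s and 1 Gbit/s respectively, which suggests the datapath is dimensioned to keep up with the link without extra headroom.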

Intel Arria 10 Evaluation System

- Targeted to the ReflexCES Intel Arria 10 SoC SoM, plugged into the ReflexCES PCIe Carrier Board Arria 10 SoC SoM

- SFP+ for 10GigE

- Supports Quartus design flow

- 10 GigE resource count (64-bit @ 156.25 MHz):

  - Quartus Prime Pro 17.0 targeting 10AS027H3F34E2SG

  - Full Stack (1 TCP socket): 24K ALM

  - Multisocket Full Stack (4 TCP sockets): 27K ALM

- Runs on ReflexCES PCIe Carrier Board Arria 10 SoC SoM Development Kit

Xilinx Virtex-6 FPGA ML605 Evaluation Kit

- Targeted to Xilinx Virtex-6 on ML605

- RJ-45 for 1GigE

- Supports ISE design flow

- 1GigE resource count (32-bit @ 31.25 MHz):

  - Synplify H-2013.03 targeting XC6VLX240T

  - Full Stack (1 TCP engine): 12K LUT

- Runs on Xilinx Virtex-6 FPGA ML605 Evaluation Kit

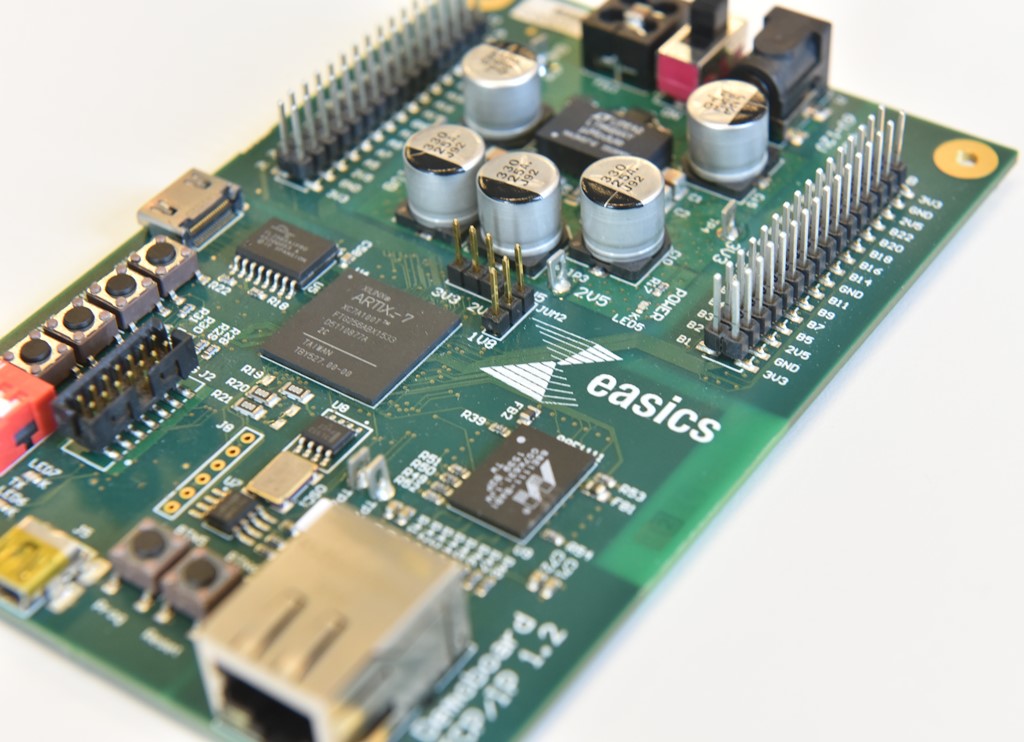

easics’ Artix-7 FPGA Demo Board

- Targeted to Xilinx Artix-7 on the easics demo board

- RJ-45 for 1GigE

- Supports Vivado design flow

- 1GigE resource count (32-bit @ 31.25 MHz):

  - Vivado 2015.1 targeting XC7A100TFTG256-2

  - Full Stack (1 TCP socket): 5K LUT

- Runs on the easics Artix-7 FPGA demo board